There are quite a few possible solutions to this task.

The simplest one is to keep a "sliding window" buffer of the last  values seen and scan it after each new sample to determine the lowest and the highest value in the buffer. This obviously runs in

values seen and scan it after each new sample to determine the lowest and the highest value in the buffer. This obviously runs in  time, which is too slow for any larger test cases. Such a solution is expected to score about

time, which is too slow for any larger test cases. Such a solution is expected to score about  .

.

An easy improvement over the naïve solution is to cache the minimum and maximum and only scan the full buffer when a current extreme value is pushed out. While this still runs in  time in the worst case, it is much faster in many cases. Such a solution is expected to score about

time in the worst case, it is much faster in many cases. Such a solution is expected to score about  , as the larger test cases are engineered against this heuristic.

, as the larger test cases are engineered against this heuristic.

An asymptotic improvement is to build a binary tree that keeps the last  values in the leaves and the minimum and maximum of each subtree in the internal nodes. Then the global minimum and maximum can be updated in

values in the leaves and the minimum and maximum of each subtree in the internal nodes. Then the global minimum and maximum can be updated in  steps by replacing the oldest value in the buffer with the new one and updating the minima and maxima on the path from the updated leaf to the root of the tree and the overall running time is

steps by replacing the oldest value in the buffer with the new one and updating the minima and maxima on the path from the updated leaf to the root of the tree and the overall running time is  . Such a solution is expected to score full points.

. Such a solution is expected to score full points.

Another solution is to keep a "last seen" index for each possible sample value and scan this table after each new sample to determine the lowest and highest value seen no more than  samples ago. This obviously runs in

samples ago. This obviously runs in  time, where

time, where  is the size of the range of sample values. Such a solution is expected to score about

is the size of the range of sample values. Such a solution is expected to score about  .

.

Like in the "sliding window" case, we can cache the minimal and maximal values seen recently and use this information to reduce the scanning range. This reduces the worst-case running time to  and proves to be surprisingly efficient on average. Such a solution is expected to score about

and proves to be surprisingly efficient on average. Such a solution is expected to score about  , as the larger test cases are engineered against this heuristic.

, as the larger test cases are engineered against this heuristic.

The "last seen"-based solution can also be augmented with a binary tree. This time we need to keep only the maximum of each subtree, as this is sufficient to reduce the search for the highest and lowest value seen among the last  samples to

samples to  steps and thus the total running time to

steps and thus the total running time to  . Such a solution is expected to score about

. Such a solution is expected to score about  .

.

Yet another solution is to keep a "number of times seen among the last  " counter for each possible sample value in addition to the

" counter for each possible sample value in addition to the  -element "sliding window" buffer. Using the buffer, we can update the counters in constant time when a new sample arrives and an old one retires. Using the counters and the most recent sample

-element "sliding window" buffer. Using the buffer, we can update the counters in constant time when a new sample arrives and an old one retires. Using the counters and the most recent sample  , we can check in

, we can check in  steps if the values

steps if the values  account for all

account for all  samples for some

samples for some  . The total running time of this solution is

. The total running time of this solution is  . Such a solution is expected to score about

. Such a solution is expected to score about  .

.

An  solution could be written using a segment tree on the whole input sequence. Such a solution would be expected to score about

solution could be written using a segment tree on the whole input sequence. Such a solution would be expected to score about  .

.

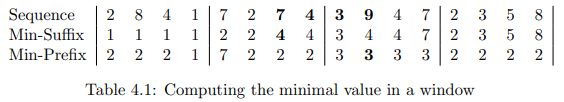

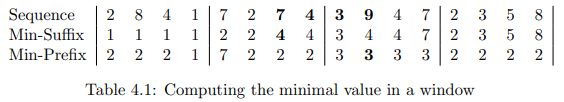

An optimal solution can be developed considering the prefixes and suffixes of  -element blocks of the input sequence. For each block, we can sweep the block from left to right and then from right to left to compute the minimal values for each prefix and suffix of the block, as shown in table 4.1 for the case

-element blocks of the input sequence. For each block, we can sweep the block from left to right and then from right to left to compute the minimal values for each prefix and suffix of the block, as shown in table 4.1 for the case  ,

,  . An arbitrary window consists of a suffix of one block and a prefix of the following one (shown in bold in the first row of the table). The minimal value of the window can be computed in constant time by taking the minimum of the min-suffix and the min-prefix corresponding to the suffix and prefix that make up the window (shown in bold in the second and third row). The maximal value can be computed similarly, which yields a solution that runs in

. An arbitrary window consists of a suffix of one block and a prefix of the following one (shown in bold in the first row of the table). The minimal value of the window can be computed in constant time by taking the minimum of the min-suffix and the min-prefix corresponding to the suffix and prefix that make up the window (shown in bold in the second and third row). The maximal value can be computed similarly, which yields a solution that runs in  time and

time and  space. Such a solution is expected to score full points. Actually, it would be sufficient to keep only two adjacent blocks in the memory at any time, and thus reduce the memory requirement to

space. Such a solution is expected to score full points. Actually, it would be sufficient to keep only two adjacent blocks in the memory at any time, and thus reduce the memory requirement to  .

.

Another optimal solution can be derived from the observation that after encountering a value  , any previously encountered value

, any previously encountered value  will not affect computing the maximum anymore. Therefore we can replace the naïve "sliding window" with a list of indices

will not affect computing the maximum anymore. Therefore we can replace the naïve "sliding window" with a list of indices  such that

such that  and

and  . When a new value

. When a new value  arrives, the list can be updated as follows:

arrives, the list can be updated as follows:

- If

, remove

, remove  from the front of the list.

from the front of the list.

- While

and

and  , remove

, remove  from the back of the list.

from the back of the list.

- Append

to the back of the list.

to the back of the list.

It should be obvious that the preceding steps guarantee that at any time the value  is the maximum among the last

is the maximum among the last  samples. While the algorithm can spend

samples. While the algorithm can spend  operations to process the arrival of a sample

operations to process the arrival of a sample  , the total cost of processing all

, the total cost of processing all  samples can't exceed

samples can't exceed  , as each index enters and leaves the list only once. Maintaining the minimal value among the last

, as each index enters and leaves the list only once. Maintaining the minimal value among the last  samples can be done similarly, or just by keeping another list that is updated based on the value

samples can be done similarly, or just by keeping another list that is updated based on the value  for each sample

for each sample  . Such a solution using

. Such a solution using  time and

time and  memory is expected to score full points.

memory is expected to score full points.

The last solution has the additional benefit that it is easily modifiable for the case when silence is defined as at least  samples where the noise level does not exceed the threshold. Also, with a bit more careful programming, we could get away using only

samples where the noise level does not exceed the threshold. Also, with a bit more careful programming, we could get away using only  memory. This could be useful when analyzing long recordings containing potentially long sections of silence on a device with limited memory.

memory. This could be useful when analyzing long recordings containing potentially long sections of silence on a device with limited memory.

Comments